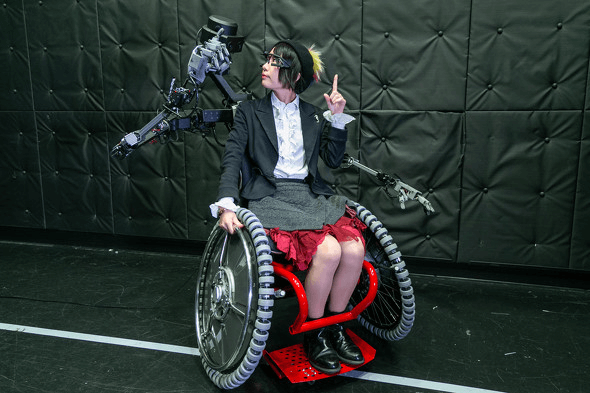

“SlideFusion” is a VR-enabled wheelchair system designed by graduate students at Keio University. It was built to assist wheelchair users and to reduce their physical and cognitive burden. A VR-integrated robot with two arms is mounted behind the wheelchair, allowing a remote operator to control the robot's movements through VR.

SlideFusion combines a custom-built, omnidirectional power-assisted wheelchair with an integrated avatar robot. It also includes an eye-tracking module that the wheelchair user can wear.

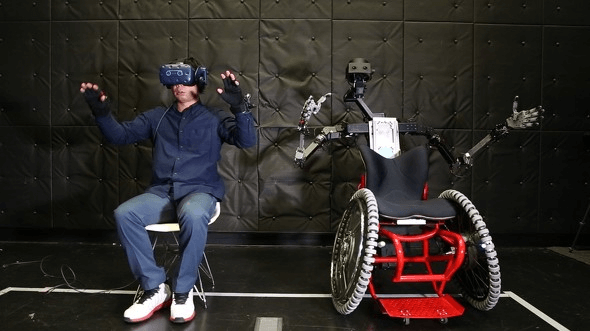

The robot consists of two arms, a head fitted with a stereoscopic camera and a binaural hearing device, and an upper body (chassis) that connects these parts. A remote operator controls the robot using a VR head-mounted display (HMD) and a data glove, with a delay of less than 100 ms between the operator and the robot. To keep the wheelchair user safe, the robot's arms are restricted to a specified operating range so that they cannot touch or strike the user's body.
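The article does not say how the operating range is enforced. One simple way such a limit can work is sketched below, assuming the limit is an axis-aligned box in the robot's base frame and that every hand position derived from the operator's glove is clipped to that box before being sent to the arm. The names `Box`, `clamp_to_workspace`, and `SAFE_BOX`, and all numeric bounds, are hypothetical; the real system may instead use joint limits or a more detailed body model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned operating range in the robot's base frame (metres)."""
    lo: tuple  # (x_min, y_min, z_min)
    hi: tuple  # (x_max, y_max, z_max)


def clamp_to_workspace(target, box):
    """Clip a requested hand position to the permitted operating range.

    Each axis is clipped independently, which for an axis-aligned box yields
    the nearest point inside the box. The clipped point, not the raw
    glove-derived target, is what would be passed on to the arm controller.
    """
    return tuple(min(max(t, lo), hi) for t, lo, hi in zip(target, box.lo, box.hi))


# Illustrative numbers only (assumption): x points forward toward the seat,
# so capping x_max keeps the hands out of the space occupied by the user.
SAFE_BOX = Box(lo=(-0.60, -0.80, 0.00), hi=(0.25, 0.80, 1.40))

if __name__ == "__main__":
    print(clamp_to_workspace((0.50, 0.00, 0.70), SAFE_BOX))  # (0.25, 0.0, 0.7)
```

Clipping rather than rejecting out-of-range targets lets the arm keep following the operator's hand along the boundary instead of freezing whenever the operator reaches too far.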

The “SlideFusion” avatar robot, controlled by a remote operator using a VR HMD and a hand-tracking data glove.

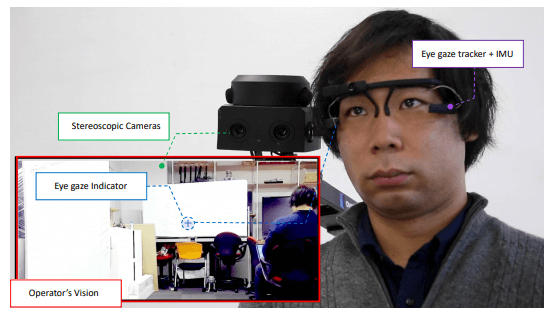

The wheelchair user can wear an eye-tracking module with embedded IMU (inertial measurement unit) sensors that track the wearer's head movements. Gaze data collected by the module is sent to the remote operator together with the video from the robot's stereoscopic camera. The two are superimposed, so the operator can see in real time what the wheelchair user is looking at.
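The article does not describe how the gaze data is aligned with the robot's camera image. As a rough illustration only, the sketch below assumes the eye tracker reports a gaze point in pixels of its own scene image and that a 3x3 homography `H`, obtained from some calibration step, maps that image onto the robot camera's image. A single homography is exact only for a planar scene or a pure rotation between the two viewpoints, and with a moving head it would need to be updated continuously, for example from the IMU data. The functions `map_gaze` and `overlay_point` are hypothetical names.

```python
def map_gaze(gaze_px, H):
    """Map a gaze point (x, y) from the eye tracker's scene image into the
    robot camera image using a 3x3 homography H (row-major nested lists)."""
    x, y = gaze_px
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("gaze point maps to infinity under H")
    # Divide out the homogeneous coordinate to get pixel coordinates.
    return (xh / w, yh / w)


def overlay_point(gaze_px, H, width, height):
    """Return integer pixel coordinates at which to draw the gaze marker on
    the robot's camera frame, or None if the gaze falls outside that frame."""
    u, v = map_gaze(gaze_px, H)
    if 0 <= u < width and 0 <= v < height:
        return (int(round(u)), int(round(v)))
    return None


if __name__ == "__main__":
    # Hypothetical calibration: the robot camera view is shifted 10 px right
    # and 5 px up relative to the eye tracker's scene camera.
    H = [[1, 0, 10], [0, 1, -5], [0, 0, 1]]
    print(overlay_point((100, 200), H, 1280, 720))  # (110, 195)
```

Returning `None` for off-frame gaze lets the operator's display simply hide the marker when the user looks at something the robot's camera cannot see.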

While the wheelchair user wears the eye-tracking module, the remote operator can identify the user's next point of interest in real time. Based on this gaze information, the operator can then move the wheelchair, and the user with it, toward that location. The system is designed to help the operator quickly understand what the wheelchair user is interested in and provide appropriate support for the situation without verbal communication. A demonstration video shows examples of navigating by finger pointing and of picking up a cup from a desk.

Japanese original article: https://www.itmedia.co.jp/news/articles/2008/19/news065.html