This article shows how to create 3D models from images and text using the AI tools GENIE and TripoSR.

GENIE

Luma AI’s GENIE is an AI service that generates 3D models from text input. Unlike traditional 3D modeling, it requires no specialized knowledge or software, so anyone can create 3D models easily.

Features

- Text to 3D: Easily create 3D models from natural-language prompts.

- Fast generation: Generate multiple 3D models in seconds.

- Editing: Generated 3D models can be edited, and you can choose from multiple variations.

- Commercial use: You can use your 3D models for commercial purposes.

How to use

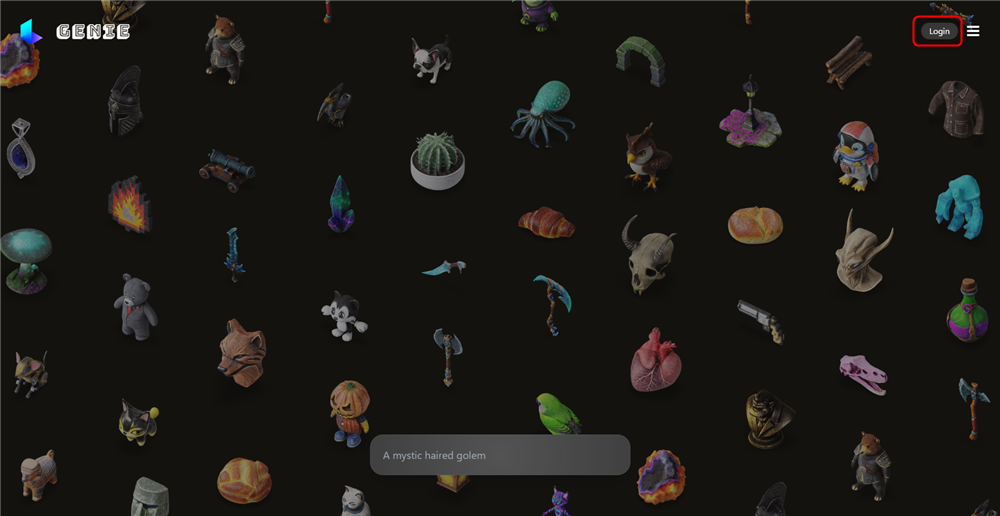

First, access GENIE and create an account.

Click Login in the upper right corner of the screen and choose how to log in (if you do not have an account, click Login as well).

Login

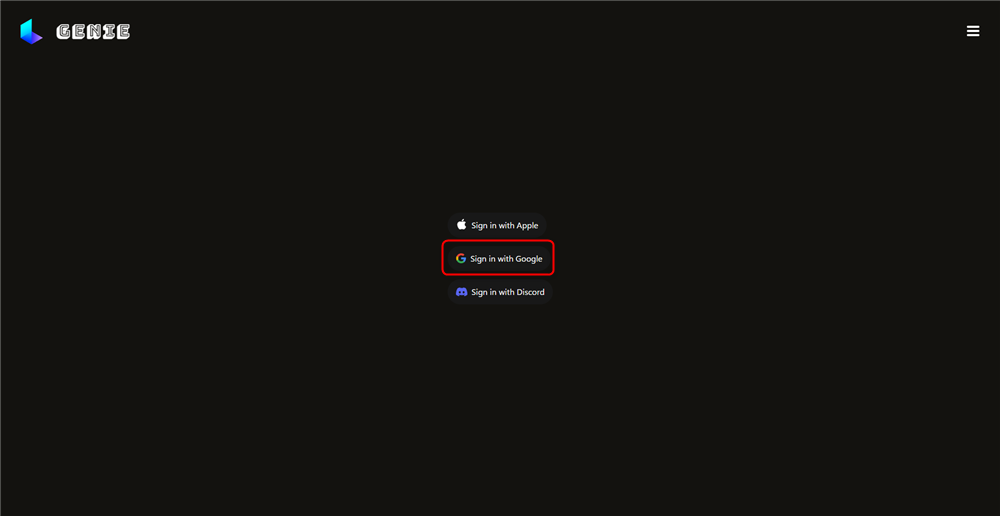

This time, select Sign in with Google and sign in with your Google account.

Sign in with Google

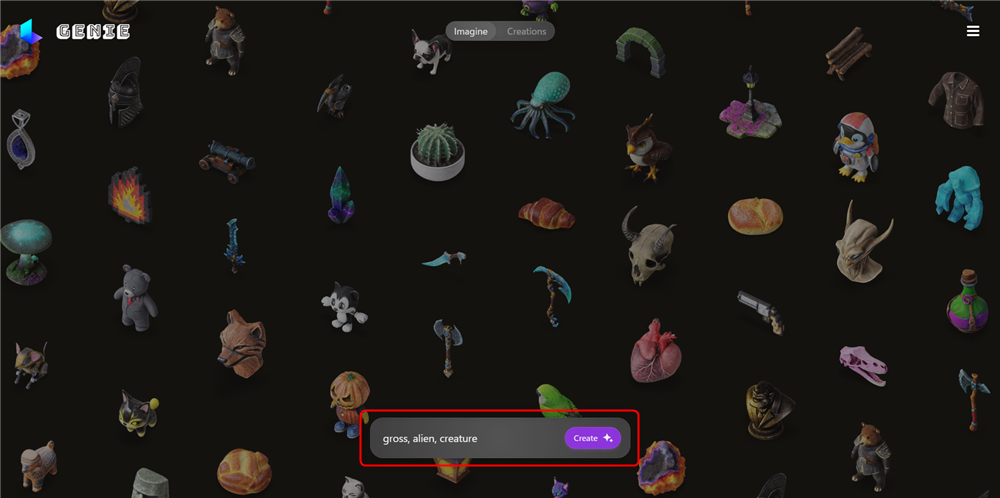

Once logged in, describe what you want to generate, in English, in the box at the bottom of the screen.

Enter some words

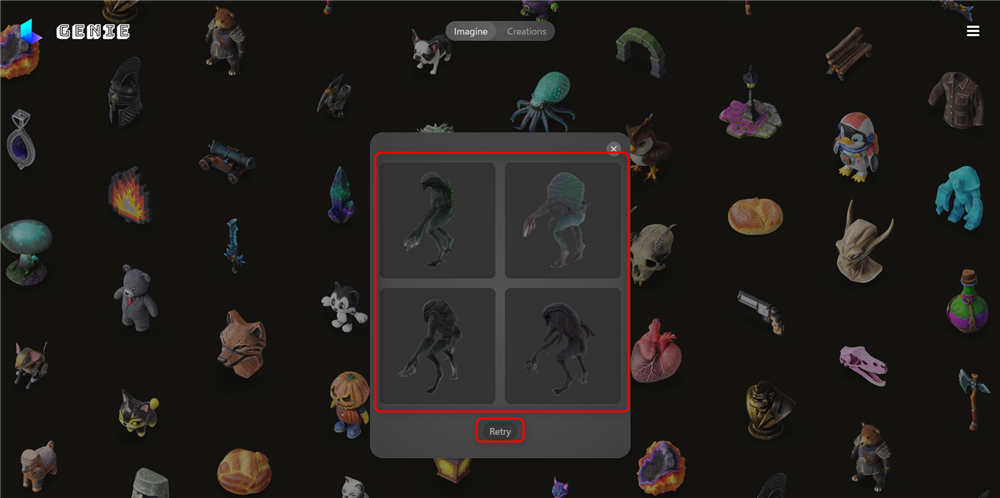

Once entered, click Create, wait a moment, and four models will be generated.

Create

If you do not like the model, click Retry to generate it again.

Once you have decided on a model, click it to adjust its detailed settings.

Settings

- Make High-Res

Texture resolution can be increased (may take some time).

- Variations

You can return to the model selection screen.

- Retopologize

When Retopologize is enabled, you can choose the resolution of the model: High increases the polygon count and file size, while Low decreases them.

- Format

You can choose the file format of the model to download:

fbx, gltf, usdz, blend, stl, obj

Once you have configured these settings, click Download to download the model.

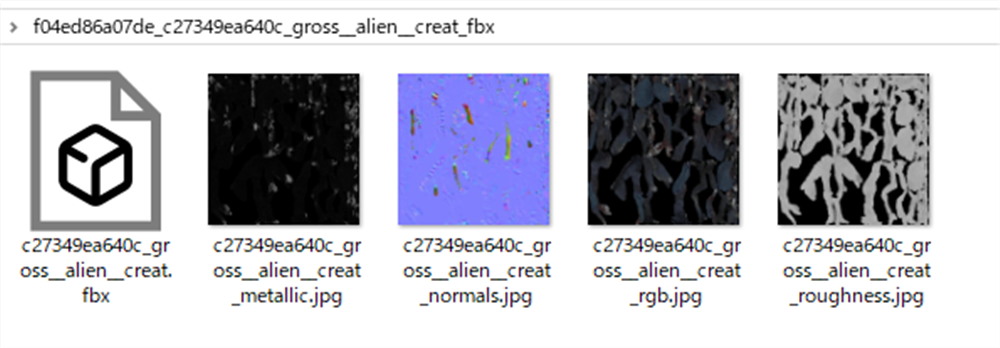

The download contains the model data and its textures.

Texture

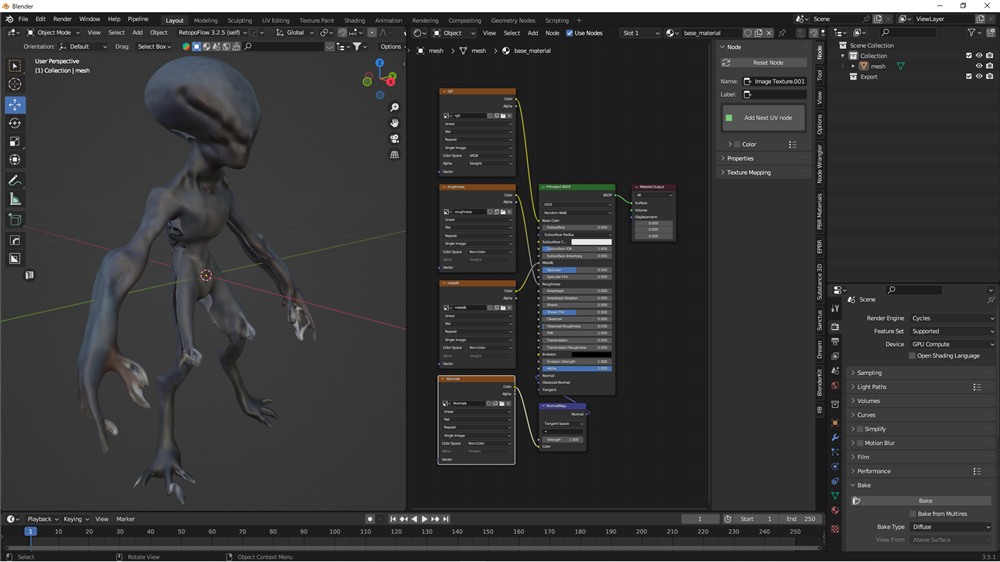

Open the downloaded model in Blender to check it.

Check out in Blender

TripoSR

TripoSR lets you easily generate 3D models from photos in a web browser. It can also be run locally, but this article explains how to use it on the web.

First, let’s access the TripoSR demo page.

https://huggingface.co/spaces/stabilityai/TripoSR

Let’s start with a sample.

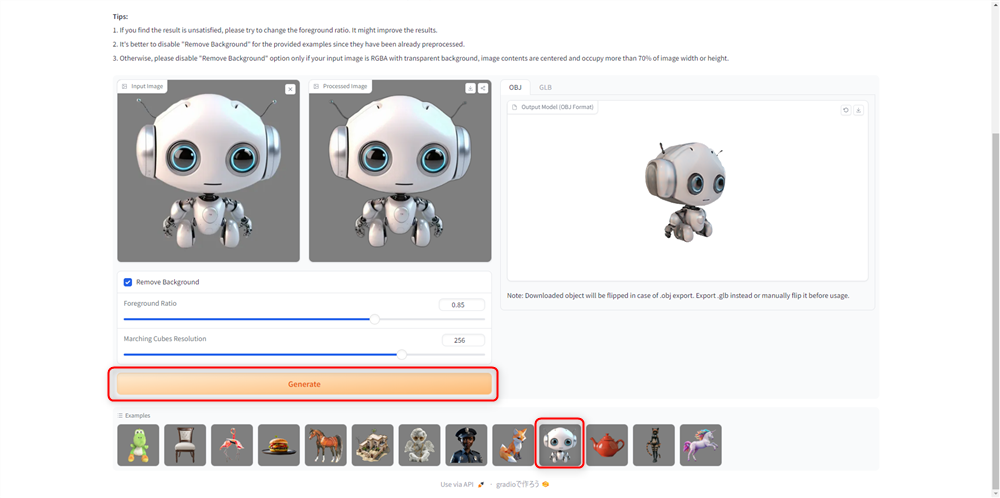

Select the sample of your choice, click Generate, and wait a moment to generate a 3D model.

TripoSR

Settings

- Remove Background

Automatically removes the background if the image has one. The model tends to be more accurate with this option turned off, so if possible, prepare an image with a transparent background and turn off Remove Background.

- Foreground Ratio

Sets how much of the frame the object occupies. The higher the value, the less empty margin is left around the object, so adjust accordingly.

- Marching Cubes Resolution

Sets the resolution of the generated model. You can choose a value from 32 to 320; the higher the value, the more detailed the model will be.
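As a rough illustration of why higher Marching Cubes Resolution values take longer: marching cubes samples the shape on a regular 3D grid, so the number of sample points grows with the cube of the resolution. The sketch below only does that arithmetic. The function name is hypothetical, and it does not call TripoSR itself.

```python
def grid_points(resolution: int) -> int:
    """Number of sample points in a cubic grid with `resolution` points per axis."""
    return resolution ** 3


# Compare a few settings against the minimum value of 32.
for res in (32, 128, 256, 320):
    factor = grid_points(res) // grid_points(32)
    print(f"resolution {res:>3}: {grid_points(res):>10,} points ({factor}x the minimum)")
```

Going from 32 to 320 multiplies the resolution by 10 but the number of grid points by 1,000, so it is reasonable to start low while experimenting and raise the value for the final model.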

Once the model is generated, you can select the export format from OBJ or GLB and download it from the download button.

How to use

To use your own images, drag and drop them into the “Input Image” area, or click to select images from a folder.

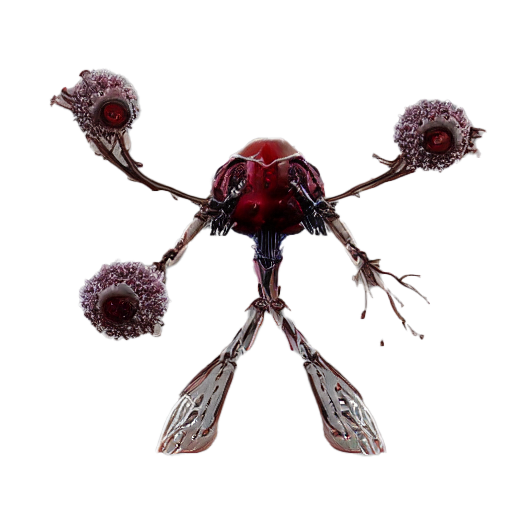

In this case, we will use an image of a creature that was generated in advance.

Sample image

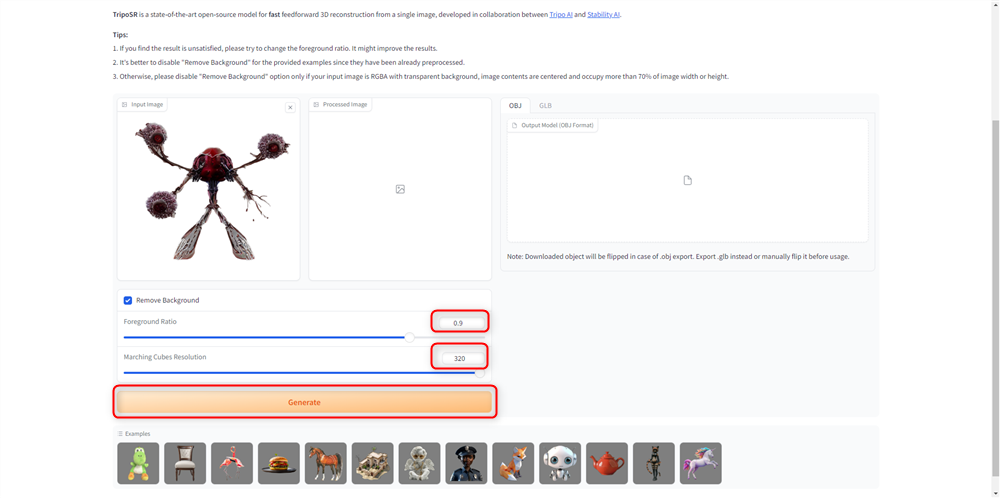

Drag and drop the image and set the Foreground Ratio to 0.9 and the Marching Cubes Resolution to 320.

Foreground Ratio = 0.9, Marching Cubes Resolution = 320

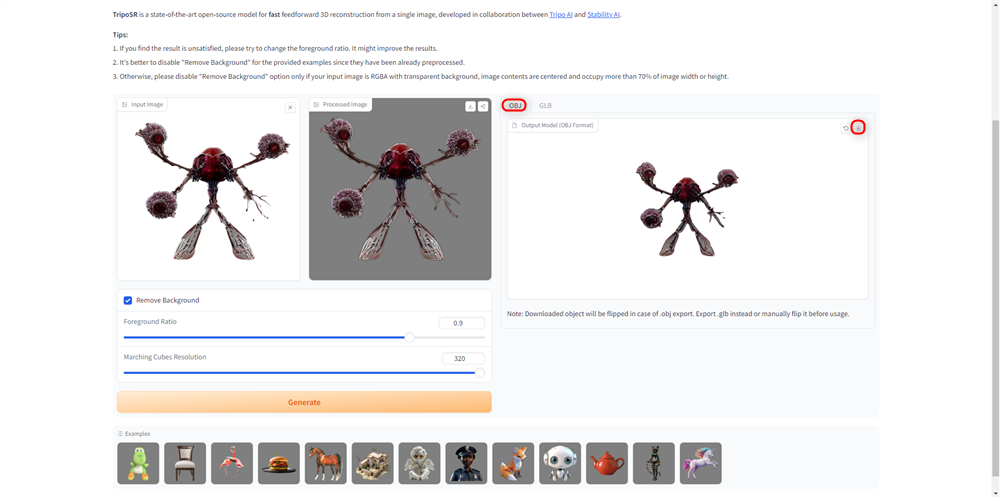
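One way to read the Foreground Ratio value is as the fraction of the frame taken up by the object. The sketch below assumes the object is cropped and then padded onto a square canvas so that its longer side fills that fraction. This is an interpretation of the setting for illustration, not TripoSR's actual preprocessing code, and both function names are hypothetical.

```python
def padded_canvas_size(fg_width: int, fg_height: int, foreground_ratio: float) -> int:
    """Side length of a square canvas in which the object's longer side
    takes up `foreground_ratio` of the canvas."""
    if not 0.0 < foreground_ratio <= 1.0:
        raise ValueError("foreground_ratio must be greater than 0 and at most 1")
    longest_side = max(fg_width, fg_height)
    return round(longest_side / foreground_ratio)


def margin_per_side(fg_width: int, fg_height: int, foreground_ratio: float) -> int:
    """Empty margin (in pixels) on each side of the object's longer axis."""
    canvas = padded_canvas_size(fg_width, fg_height, foreground_ratio)
    return (canvas - max(fg_width, fg_height)) // 2


# Hypothetical object of 450 x 300 pixels at two different ratios.
for ratio in (0.9, 0.5):
    print(ratio, padded_canvas_size(450, 300, ratio), margin_per_side(450, 300, ratio))
```

Under this reading, a ratio of 0.9 leaves only a thin margin around the object, while 0.5 leaves the object at half the frame size.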

Click Generate and wait a moment to generate the model, then select OBJ and download it.

Download OBJ

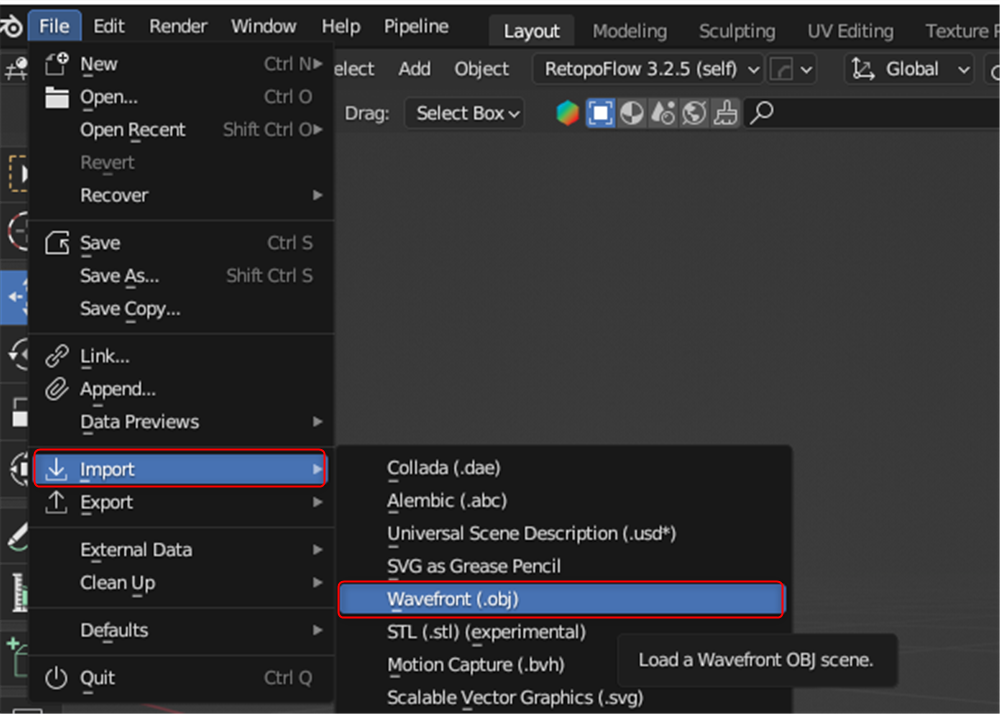
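Before opening the file in Blender, the downloaded OBJ can be inspected with a few lines of Python. The sketch below counts vertices and faces and checks whether each vertex carries a color. It assumes colors are stored as three extra values on the `v` lines (`v x y z r g b`), a widely used but unofficial OBJ extension; whether your export uses it is worth confirming on the actual file. The function names and the sample data are hypothetical.

```python
from pathlib import Path


def summarize_obj(text: str) -> dict:
    """Count vertices and faces in OBJ text and detect per-vertex colors."""
    vertices = faces = colored = 0
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices += 1
            # "v x y z r g b": position followed by an RGB color
            if len(parts) >= 7:
                colored += 1
        elif parts[0] == "f":
            faces += 1
    return {
        "vertices": vertices,
        "faces": faces,
        "has_vertex_colors": vertices > 0 and colored == vertices,
    }


def summarize_obj_file(path: str) -> dict:
    """Same summary, read from a file on disk."""
    return summarize_obj(Path(path).read_text())


# Tiny hand-written triangle with one color per vertex.
sample = """\
v 0.0 0.0 0.0 1.0 0.0 0.0
v 1.0 0.0 0.0 0.0 1.0 0.0
v 0.0 1.0 0.0 0.0 0.0 1.0
f 1 2 3
"""
print(summarize_obj(sample))
```

If the summary reports vertex colors, the model's appearance lives in the mesh itself rather than in an image file, which is why it has to be baked to a texture later.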

Let’s check the downloaded contents in Blender.

Start Blender, go to File → Import, click Wavefront (.obj), and select the downloaded file.

File→Import→Wavefront(.obj)

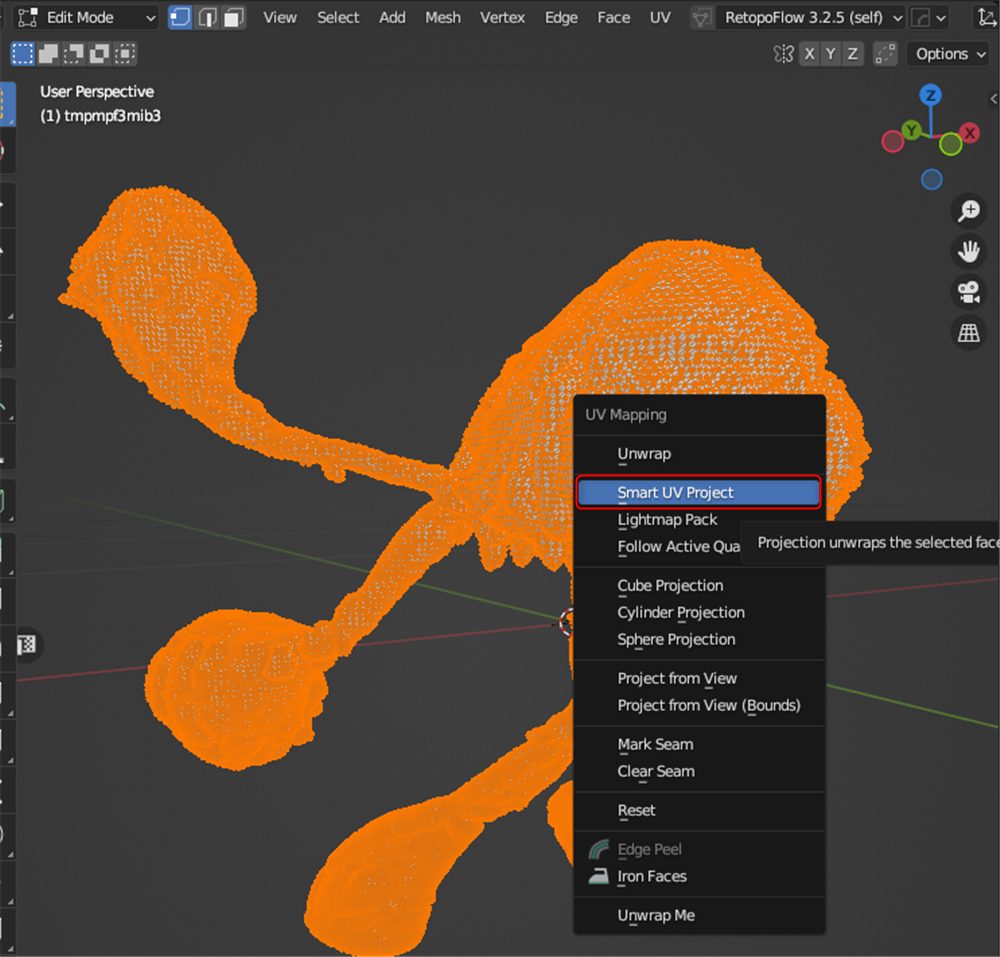

Select the model, press the TAB key to enter Edit Mode, press the A key to select all, press the U key and choose Smart UV Project, and click OK.

Smart UV Project

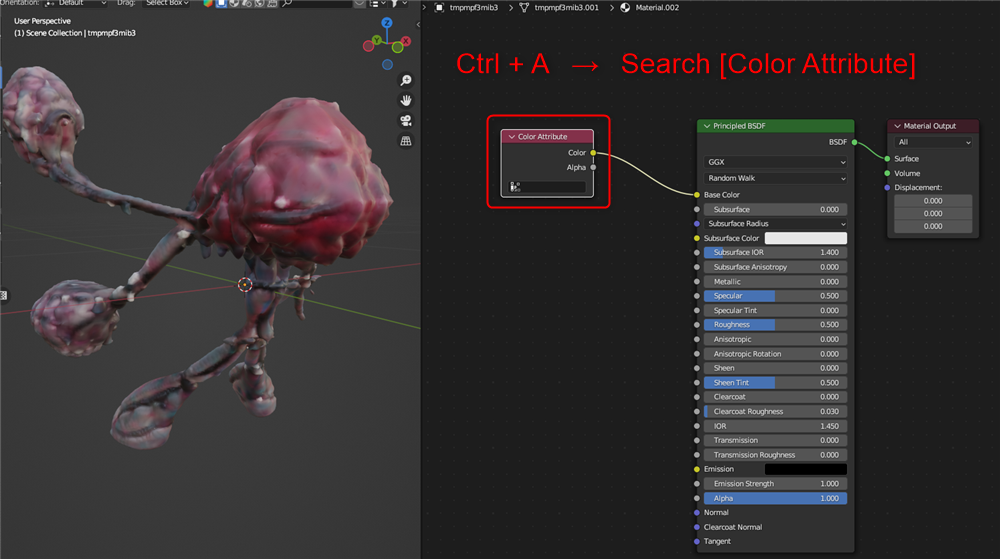
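The import and unwrap steps above can also be scripted from Blender's Scripting tab. This is a minimal sketch, assuming a recent Blender release where the OBJ importer is exposed as `bpy.ops.wm.obj_import` (older releases used `bpy.ops.import_scene.obj`) and that the imported mesh is the first selected object after import. The function name is hypothetical.

```python
def import_and_unwrap(obj_path: str):
    """Import an OBJ file and unwrap it with Smart UV Project.

    Must be run inside Blender, where the `bpy` module is available.
    """
    import bpy  # only importable inside Blender

    # File > Import > Wavefront (.obj)
    bpy.ops.wm.obj_import(filepath=obj_path)

    # Assumption: the importer leaves the new object selected.
    obj = bpy.context.selected_objects[0]
    bpy.context.view_layer.objects.active = obj

    bpy.ops.object.mode_set(mode="EDIT")      # TAB
    bpy.ops.mesh.select_all(action="SELECT")  # A
    bpy.ops.uv.smart_project()                # U > Smart UV Project (default options)
    bpy.ops.object.mode_set(mode="OBJECT")    # back to Object Mode
    return obj
```

Inside Blender, calling `import_and_unwrap("/path/to/model.obj")` (the path is a placeholder) should leave the model imported with a new UV map.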

Add a new material in the Shader Editor and add a Color Attribute node to display the model’s colors.

Color Attribute

At this point the colors cannot be carried over to other software, so bake them into a texture.

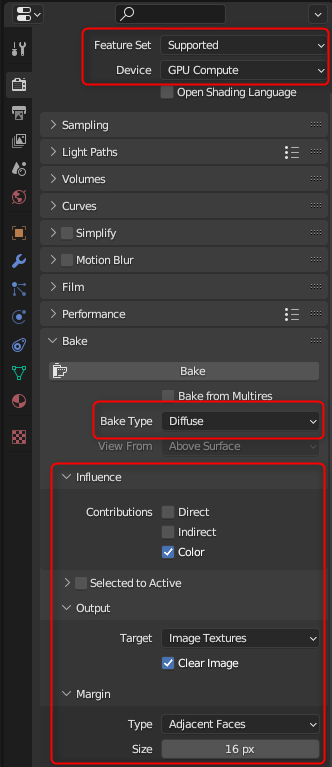

Change the Render Engine to Cycles and change the settings in the Bake section as shown in the image below. Here, Diffuse (base color) is selected for Bake Type, but you can bake other maps by changing the Type.

Bake settings

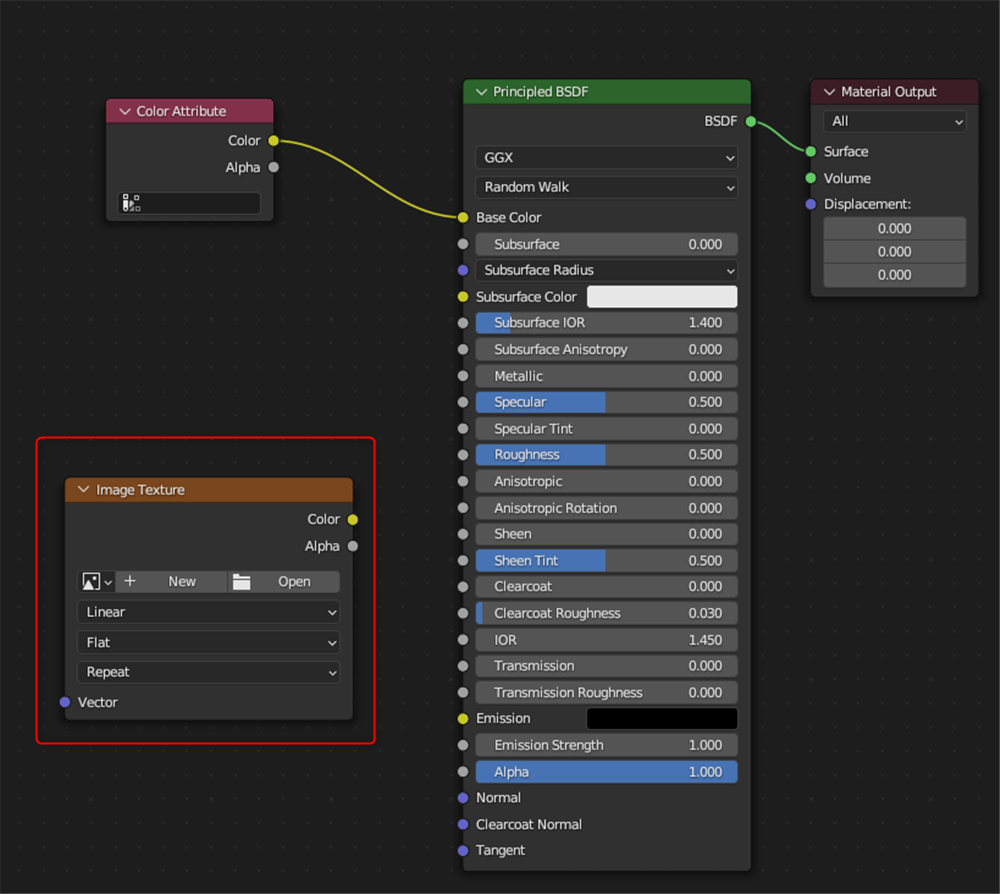

Return to the Shader Editor and add an Image Texture node.

Add Image Texture

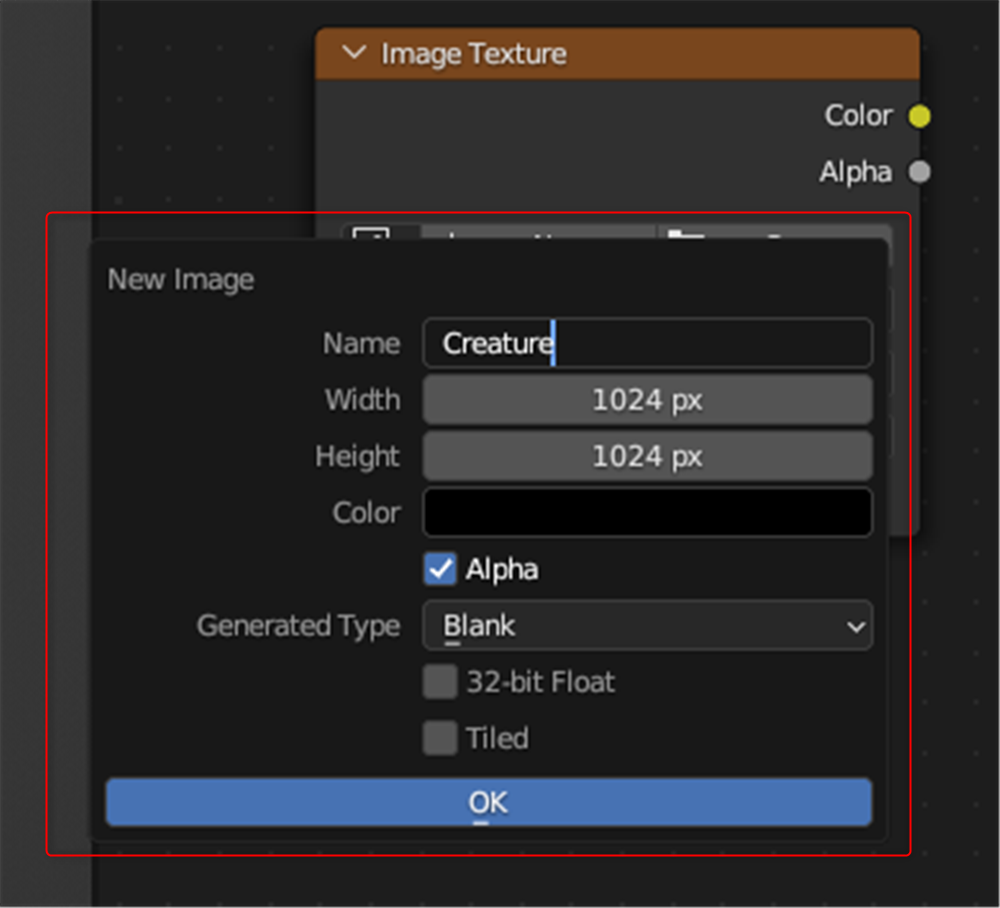

Once added, click New to create a new image.

This time change the Name to Creature and click OK.

New image

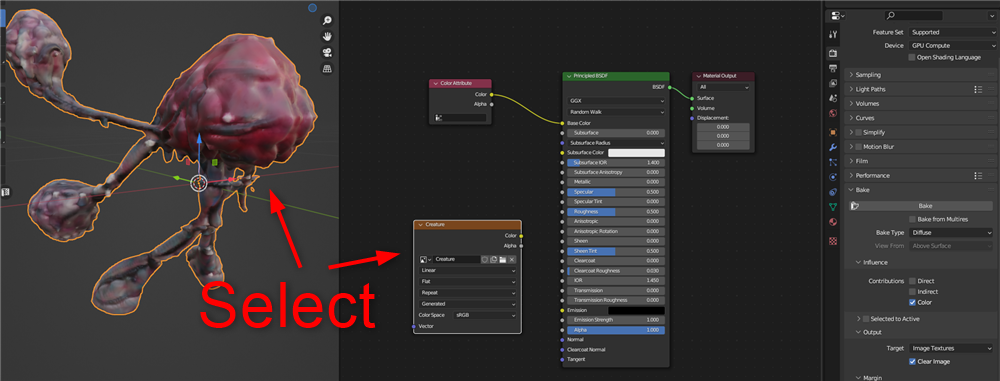

Once this is done, select the object and the Image Texture node (its outline turns orange when selected) and click Bake.

Select

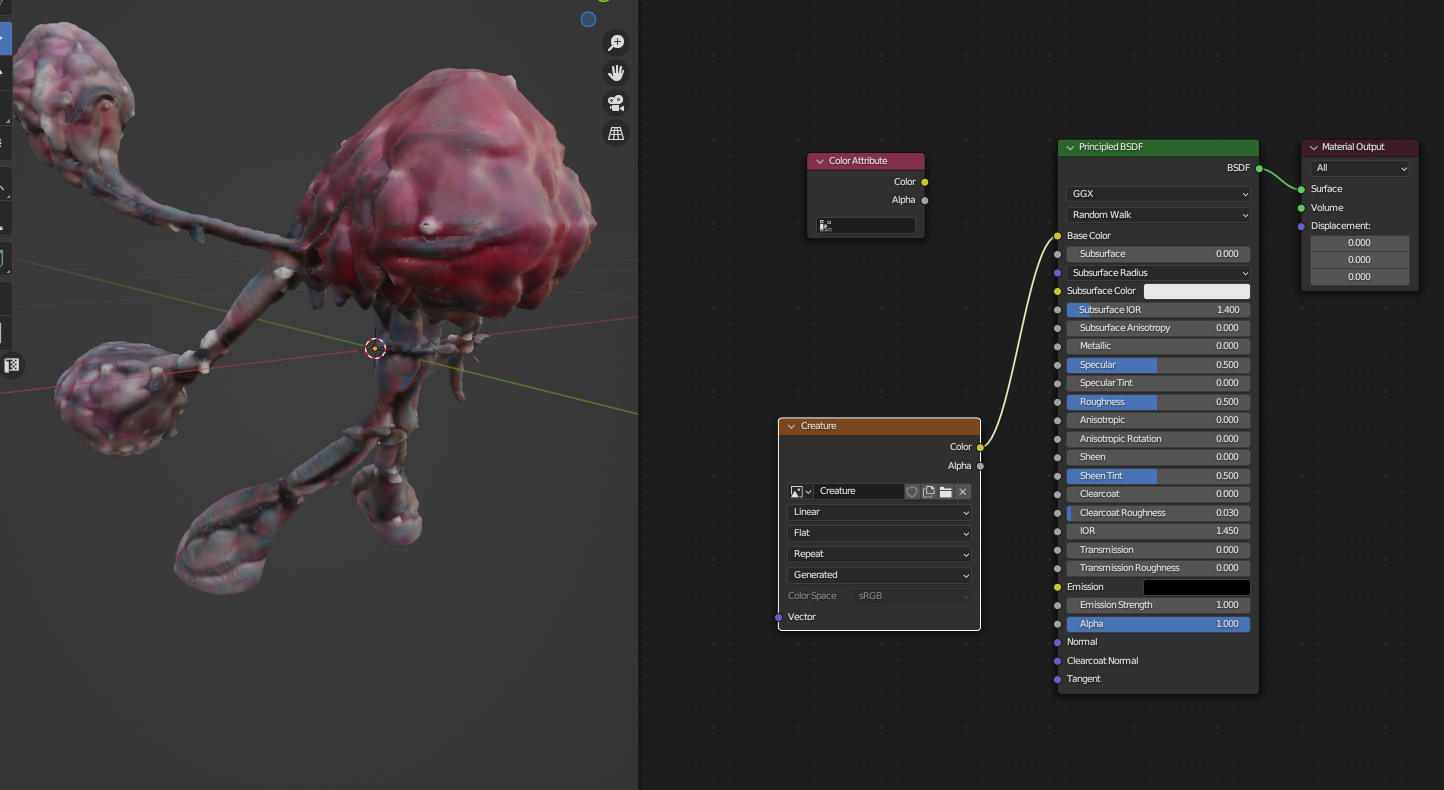

When baking is complete, connect the Image Texture node to Base Color and the texture is displayed correctly.

Base Color

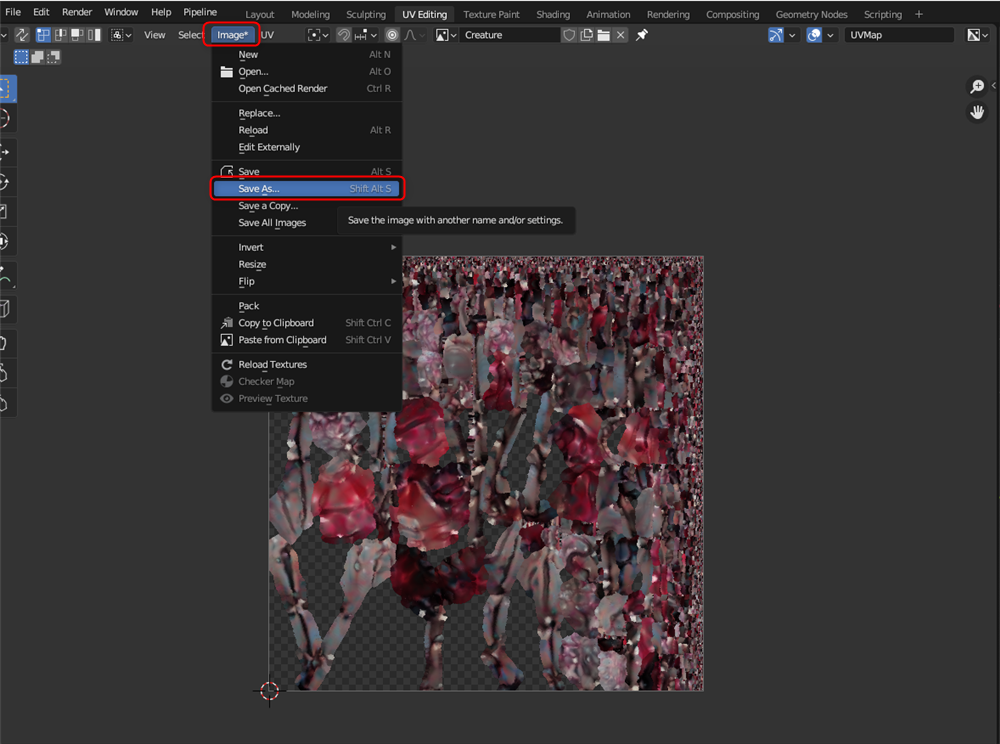

Switch to the UV Editing workspace and select the baked image.

Click Image and then Save As to save the image to a folder of your choice.

Save texture
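For reference, the bake and save steps above can be scripted as well. This is a minimal sketch, not the exact settings from the screenshots: it assumes the object already has UVs and a node-based material showing its colors, that only the color pass is baked (direct and indirect lighting turned off), and that the image is 1024 pixels square. The function name, the image size, and the output path are all hypothetical.

```python
def bake_diffuse_color(obj, image_name="Creature", size=1024, save_path=None):
    """Bake the object's diffuse color into a new image (run inside Blender)."""
    import bpy  # only importable inside Blender

    scene = bpy.context.scene
    scene.render.engine = "CYCLES"
    scene.cycles.bake_type = "DIFFUSE"
    # Assumption: bake the base color only, without lighting contributions.
    scene.render.bake.use_pass_direct = False
    scene.render.bake.use_pass_indirect = False
    scene.render.bake.use_pass_color = True

    # Image Texture node > New
    image = bpy.data.images.new(image_name, width=size, height=size)
    nodes = obj.active_material.node_tree.nodes
    tex_node = nodes.new("ShaderNodeTexImage")
    tex_node.image = image
    # The bake writes into the active Image Texture node.
    tex_node.select = True
    nodes.active = tex_node

    # Select only the target object and make it active, then bake.
    bpy.ops.object.select_all(action="DESELECT")
    obj.select_set(True)
    bpy.context.view_layer.objects.active = obj
    bpy.ops.object.bake(type="DIFFUSE")

    # Image > Save As
    if save_path:
        image.filepath_raw = save_path
        image.file_format = "PNG"
        image.save()
    return image
```

Inside Blender, this could be called as `bake_diffuse_color(bpy.context.active_object, save_path="/path/to/Creature.png")`. Connecting the new Image Texture node to Base Color is still done by hand, as described above.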

This completes the work in Blender.

Animating with Mixamo

If the generated model is humanoid, it can be animated with Mixamo. In this case, we will animate a model created with Luma AI.

For more details on how to use Mixamo, please refer to the following article.

Here is how it looks animated in Mixamo.

Dance animation

Uploading to STYLY

Let’s actually upload the scene to STYLY and use it.

This time we will upload the Unity scene directly to STYLY.
